Because you are constantly listening to the feedback from your customer, you are iterating, innovating, and improving your applications and infrastructures. You continually modify your IT systems in the cloud. And let’s face it, changing something in a working system risks breaking things or introducing side effects that are sometimes unpredictable; it doesn’t matter how many tests you do. On the other hand, not making changes is stasis, followed by irrelevance, followed by death.

This is why organizations of all sizes and types have embraced a culture of controlling changes. Some organizations adopt change management processes such as the ones defined in ITIL v4. Some have adopted DevOps’ Continuous Deployment, or other methods. In any case, to support your change management processes, it is important to have tools.

Today, we are launching AWS Systems Manager Change Manager, a new change management capability for AWS Systems Manager. It simplifies the way ops engineers track, approve, and implement operational changes to their application configurations and infrastructures.

Using Change Manager has two primary advantages. First, it can improve the safety of changes made to application configurations and infrastructures, reducing the risk of service disruptions. It makes operational changes safer by tracking that only approved changes are being implemented. Secondly, it is tightly integrated with other AWS services, such as AWS Organizations and AWS Single Sign-On, or the integration with the Systems Manager change calendar and Amazon CloudWatch alarms.

Change Manager provides accountability with a consistent way to report and audit changes made across your organization, their intent, and who approved and implemented them.

Change Manager works across AWS Regions and multiple AWS accounts. It works closely with Organizations and AWS SSO to manage changes from a central point and to deploy them in a controlled way across your global infrastructure.

Terminology

You can use AWS Systems Manager Change Manager on a single AWS account, but most of the time, you will use it in a multi-account configuration.

The way you manage changes across multiple AWS accounts depends on how these accounts are linked together. Change Manager uses the relationships between your accounts defined in AWS Organizations. When using Change Manager, there are three types of accounts:

- The management account – also known as the “main account” or “root account.” The management account is the root account in an AWS Organizations hierarchy. It is the management account by virtue of this fact.

- The delegated administrator account – A delegated administrator account is an account that has been granted permission to manage other accounts in Organizations. In the Change Manager context, this is the account from which change requests will be initiated. You will typically log in to this account to manage templates and change requests. Using a delegated administrators account allows you to limit connections made to the root account. It also allows you to enforce a least privileges policy by using a specific subset of permissions required by the changes.

- The member accounts – Member accounts are accounts that are not the management account or a delegated administrator account, but are still included in Organizations. In my mental model for Change Manager, these would be the accounts that hold the resources where changes are deployed. A delegated administrator account would initiate a change request that would impact resources in a member account. System administrators are discouraged from logging directly into these accounts.

Let’s see how you can use AWS Systems Manager Change Manager by taking a short walk-through demo.

One-Time Configuration

In this scenario, I show you how to use Change Manager with multiple AWS accounts linked together with Organizations. If you are not interested in the one-time configuration, jump to the Create a Change Request section below.

There are four one-time configuration actions to take before using Change Manager: one action in the root account and three in the delegated administrator account. In the root account, I use Quick Setup to define my delegated administrator account and initially configure permissions on the accounts. In the delegated administrator account, you define your source of user identities, you define what users have permissions to approve change templates, and you define a change request template.

First, I ensure I have an Organization in place and my AWS accounts are organized in Organizational Units (OU). For the purpose of this simple example, I have three accounts: the root account, the delegated administrator account in the management OU and a member account in the managed OU. When ready, I use Quick Setup on the root account to configure my accounts. There are multiple paths leading to Quick Setup; for this demo, I use the blue banner on top of the Quick Setup console, and I click Setup Change Manager.

On the Quick Setup page, I enter the ID of the delegated administrator account if I haven’t defined it already. Then I choose the permissions boundaries I grant to the delegated administrator account to perform changes on my behalf. This is the maximum permissions Change Manager receives to make changes. I will further restrict this permission set when I create change requests in a few minutes. In this example, I grant Change Manager permissions to call any ec2 API. This effectively authorizes Change Manager to only run changes related to EC2 instances.

Lower on the screen, I choose the set of accounts that are targets for my changes. I choose between Entire organization or Custom to select one or multiple OUs.

After a while, Quick Setup finishes configuring my AWS accounts permission and I can move to the second part of the one-time setup.

Second, I switch to my delegated administrator account. Change Manager asks me how I manage users in my organization: with AWS Identity and Access Management (IAM) or AWS Single Sign-On? This defines where Change Manager pulls user identities when I choose approvers. This is a one-time configuration option. This can be changed at any time in the Change Manager Settings page.

Third, on the same page, I define an Amazon Simple Notification Service (SNS) topic to receive notifications about template reviews. This channel is notified any time a template is created or modified, to let template approvers review and approve templates. I also define the IAM (or SSO) user with permission to approve change templates (more about these in one minute).

Optionally, you can use the existing AWS Systems Manager Change Calendar to define the periods where changes are not authorized, such as marketing events or holiday sales.

Finally, I define a change template. Every change request is created from a template. Templates define common parameters for all change requests based on them, such as the change request approvers, the actions to perform, or the SNS topic to send notifications of progress. You can enforce the review and approval of templates before they can be used. It makes sense to create multiple templates to handle different type of changes. For example, you can create one template for standard changes, and one for emergency changes that overrides the change calendar. Or you can create different templates for different types of automation run books (documents).

To help you to get started, we created a template for you: the “Hello World” template. You can use it as a starting point to create a change request and test out your approval flow.

At any time, I can create my own template. Let’s imagine my system administrator team is frequently restarting EC2 instances. I create a template allowing them to create change requests to restart one or multiple instances. Using the delegated administrator account, I navigate to the Change Manager management console and click Create template.

In a nutshell, a template defines the list of authorized actions, where to send notifications and who can approve the change request. Actions are an AWS Systems Manager runbook. Emergency change templates allow change requests to bypass the change calendar I wrote about earlier. Under Runbook Options, I choose one or multiple runbooks allowed to run. For this example, I choose the AWS EC2RestartInstance runbook.

I use the console to create the template, but templates are defined internally as YAML. I can edit the YAML using the Editor tab, or when I am using the AWS Command Line Interface (CLI) or API. This means I can version control them just like the rest of my infrastructure (as code).

Just below, I document my template using text formatted as markdown format. I use this section to document the defining characteristics of the template and provide any necessary instructions, such as back-out procedures, to the requestor.

I scroll down that page and click Add Approver to define approvers. Approvers can be individual users or groups. The list of approvers are defined either at the template level or in the change request itself. I also choose to create an SNS topic to inform approvers when any requests are created that require their approval.

In the Monitoring section I select the alarm that, when active, stops any change based on this template, and initiate a rollback.

In the Notifications section, I select or create another SNS topic so I’m notified when status changes for this template occur.

Once I am done, I save the template and submit it for review.

Templates have to be reviewed and approved before they can be used. To approve the template, I connect the console as the template_approver user I defined earlier. As template_approver user, I see pending approvals on the Overview tab. Or, I navigate to the Templates tab, select the template I want to review. When I am done reviewing it, I click Approve.

Voila, now we’re ready to create change requests based on this template. Remember that all the preceding steps are one-time configurations and can be amended at any time. When existing templates are modified, the changes go through a review and approval process again.

Create a Change Request

To create a change request on any account linked to the Organization, I open a AWS Systems Manager Change Manager console from the delegated administrator account and click Create request.

I choose the template I want to use and click Next.

I enter a name for this change request. The change is initiated immediately after all approvals are granted, or I specify an optional scheduled time. When the template allows me, I choose the approver for this change. In this example, the approver is defined by the template and cannot be changed. I click Next.

I enter a name for this change request. The change is initiated immediately after all approvals are granted, or I specify an optional scheduled time. When the template allows me, I choose the approver for this change. In this example, the approver is defined by the template and cannot be changed. I click Next.

On the next screen, there are multiple important configuration options, relating to the actual execution of the change:

- Target location – lets me define on which target AWS accounts and AWS Region I want to run this change.

- Deployment target – lets me define which resources are the target of this change. One EC2 instance? Or multiple ones identified by their tags, their resources groups, a list of instance IDs, or all EC2 instances.

- Runbook parameters – lets me define the parameters I want to pass to my runbook, if any.

- Execution role – lets me define the set of permissions I grant the System Manager to deploy with this change. The permission set must have service

changemanagement.ssm.amazonaws.com as principal for the trust policy. Selecting a role allows me to grant the Change Manager runtime a different permission set than the one I have.

Here is an example allowing Change Manager to stop an EC2 instance (you can scope it down to a specific AWS account, specific Region, or specific instances):

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ec2:StartInstances",

"ec2:StopInstances"

],

"Resource": "*",

},

{

"Effect": "Allow",

"Action": "ec2:DescribeInstances",

"Resource": "*"

}

]

}

And the associated trust policy:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "changemanagement.ssm.aws.internal"

},

"Action": "sts:AssumeRole"

}

]

}

When I am ready, I click Next. On the last page, I review my data entry and click Submit for approval.

At this stage, the approver receives a notification, based on the SNS topic configured in the template. To continue this demo, I sign out of the console and sign in again as the cr_approver user, which I created, with permission to view and approve change requests.

As the cr_approver user, I navigate to the console, review the change request, and click Approve.

The change request status switches to scheduled, and eventually turns green to Success. At any time, I can click the change request to get the status, and to collect errors, if any.

I click on the change request to see the details. In particular, the Timeline tab shows the history of this CR.

Availability and Pricing

AWS Systems Manager Change Manager is available today in all commercial AWS Regions, except mainland China. The pricing is based on two dimensions: the number of change requests you submit and the total number of API calls made. The number of change requests you submit will be the main cost factor. We will charge $0.29 per change request. Check the pricing page for more details.

You can evaluate Change Manager for free for 30 days, starting on your first change request.

As usual, let us know what you think and let’s get started today

-- seb Via AWS News Blog https://ift.tt/1EusYcK

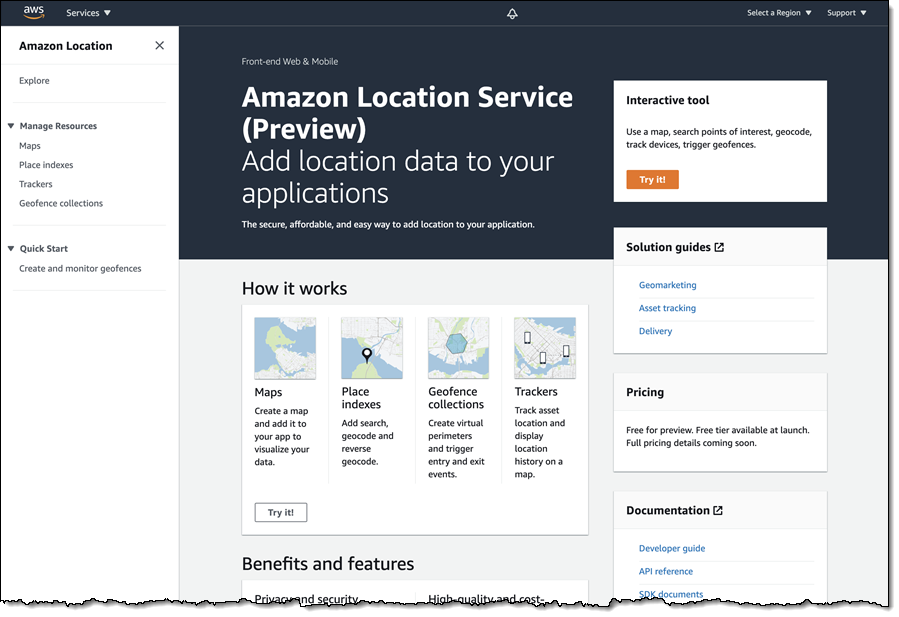

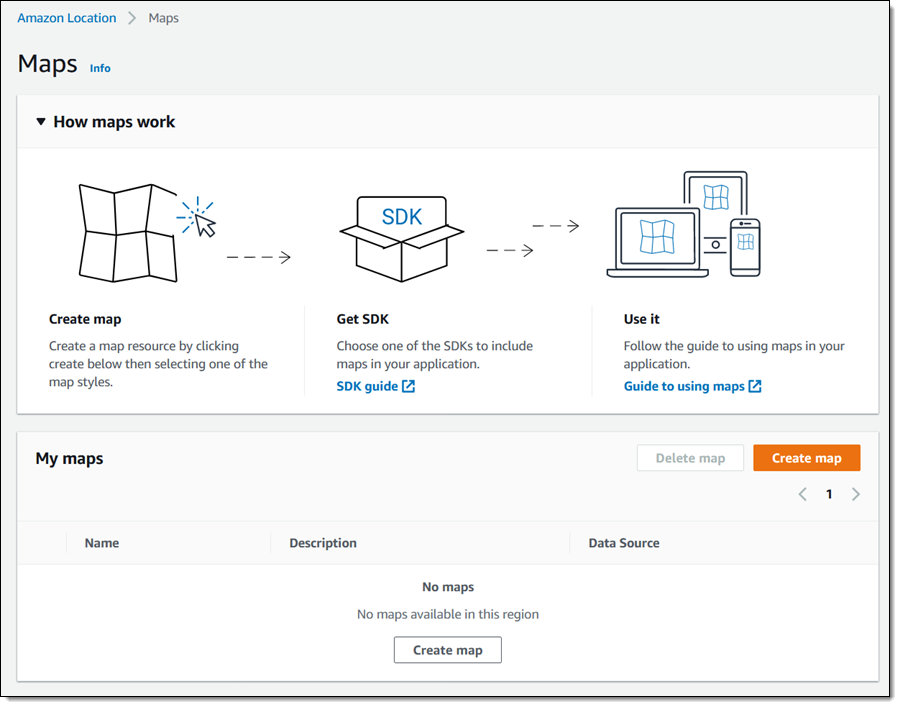

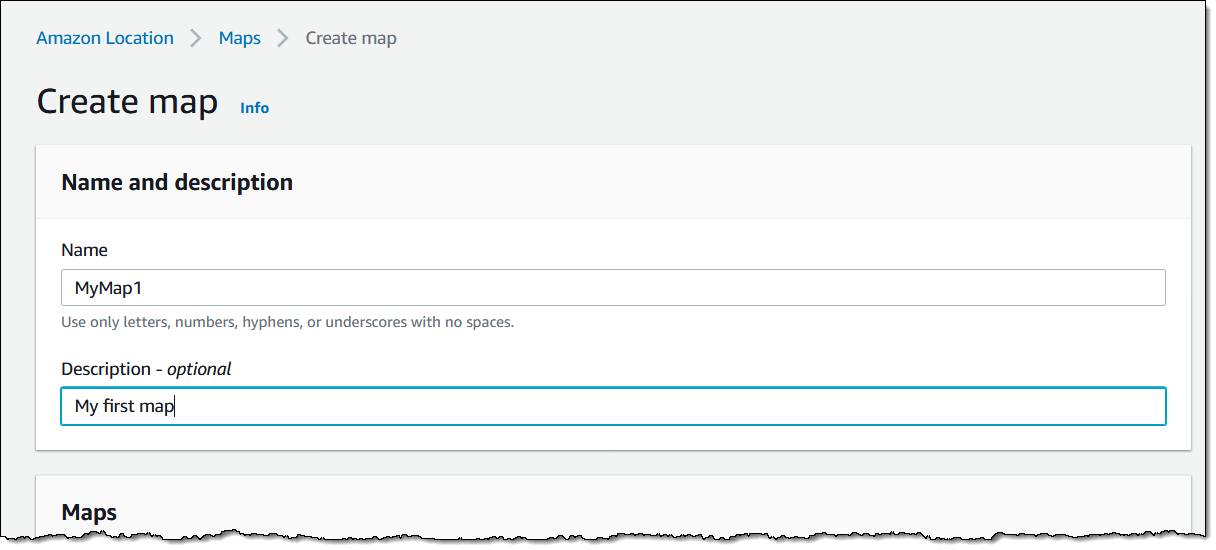

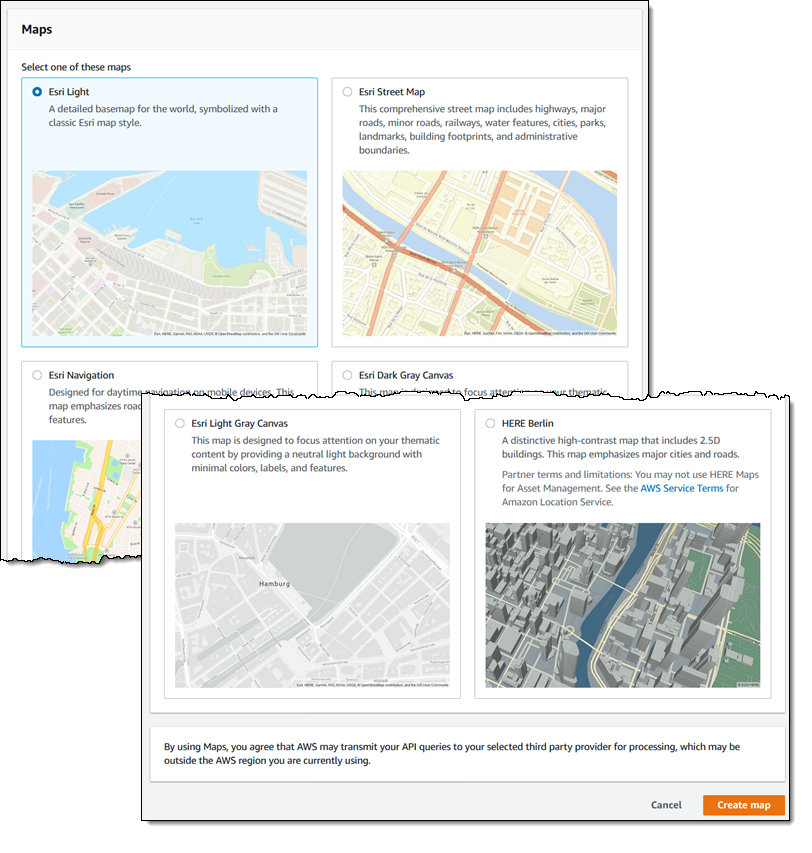

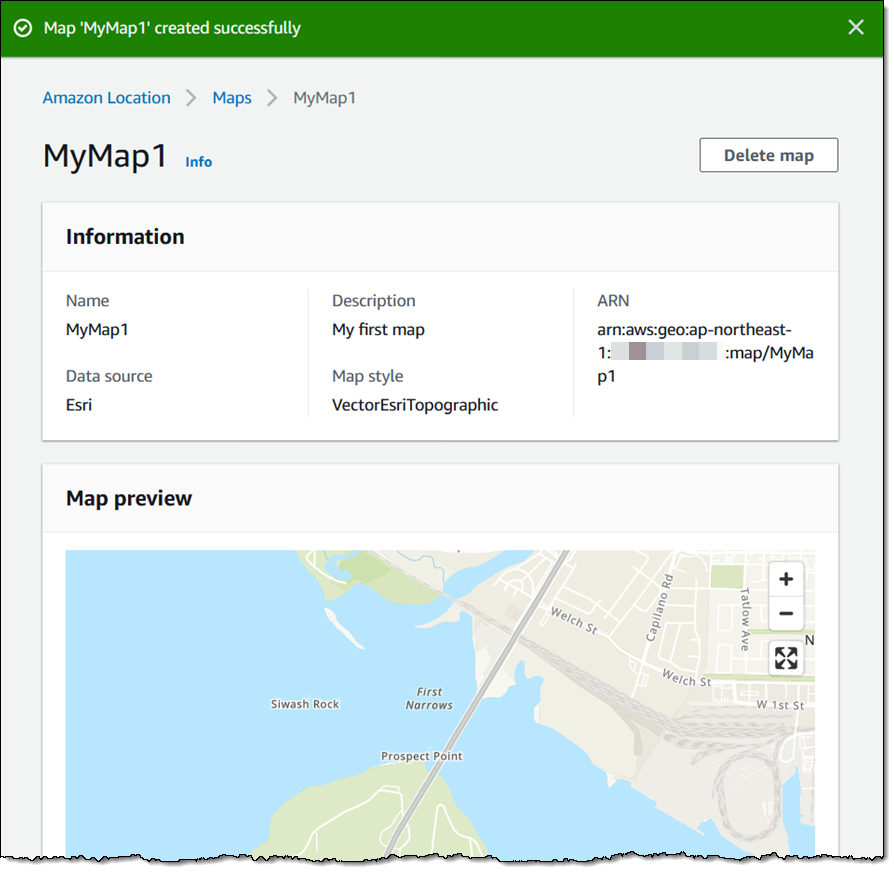

We want to make it easier and more cost-effective for you to add maps, location awareness, and other location-based features to your web and mobile applications. Until now, doing this has been somewhat complex and expensive, and also tied you to the business and programming models of a single provider.

We want to make it easier and more cost-effective for you to add maps, location awareness, and other location-based features to your web and mobile applications. Until now, doing this has been somewhat complex and expensive, and also tied you to the business and programming models of a single provider.

Embedded developers at original equipment manufacturers (OEMs) and MCU vendors using FreeRTOS to build long-lived applications on IoT devices now get the predictability and feature stability of an LTS release without compromising access to critical security updates. The FreeRTOS 202012.00 LTS release applies to the FreeRTOS kernel, connectivity libraries (FreeRTOS+TCP, coreMQTT, coreHTTP), security library (PKCS #11 implementation), and AWS library (

Embedded developers at original equipment manufacturers (OEMs) and MCU vendors using FreeRTOS to build long-lived applications on IoT devices now get the predictability and feature stability of an LTS release without compromising access to critical security updates. The FreeRTOS 202012.00 LTS release applies to the FreeRTOS kernel, connectivity libraries (FreeRTOS+TCP, coreMQTT, coreHTTP), security library (PKCS #11 implementation), and AWS library (